Key Points of Azure Kubernetes Service: Part 1

Azure Kubernetes Service (AKS) is Azure's managed offering of Kubernetes, which has become the de facto standard for container orchestration. In this article, I will go over the key points to consider when installing and using AKS, based on Microsoft's own resources and my hands-on experience.

Section 1 — Cluster Isolation and Authorization Management

In general, companies design their cloud platform around the single application they choose as a pilot for migration. However, when migrating to the cloud in general, and to AKS in particular, you should assume that more than one application will eventually be migrated, and consider how these applications will be isolated, physically or logically.

Similarly, there may be dozens of teams working on the same application, each with its own Dev, Test, and Prod environments. You can use the following features to make these distinctions in AKS:

- Scheduling: You can use node selectors, taints, tolerations, and affinities to define special scheduling rules, such as which nodes a pod may or may not run on, and which pods should or should not share a node.

- Networking: Network policies (NetworkPolicy resources) control the flow of traffic into and out of pods.

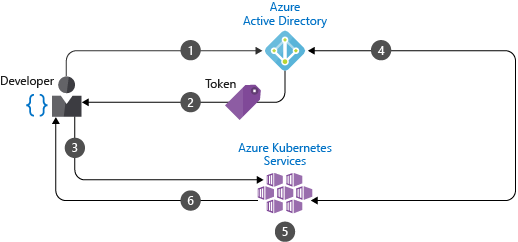

- Authentication and authorization: Azure Active Directory is recommended for authentication. By synchronizing your own Active Directory with Azure AD, you can grant permissions to your existing AD users. Authorization questions, such as which users can access which namespaces and who holds the admin role in the cluster, are managed with RBAC. Pods' access to Azure resources is managed with pod identities and Secret resources.

- Container security: Image scanning tools and runtime vulnerability monitoring tools are used.

As an example of the networking item above, the following NetworkPolicy allows ingress to pods labeled app: backend only from pods labeled app: frontend in the same namespace:

    kind: NetworkPolicy
    apiVersion: networking.k8s.io/v1
    metadata:
      name: backend-policy
    spec:
      podSelector:
        matchLabels:
          app: backend
      ingress:
      - from:
        - podSelector:
            matchLabels:
              app: frontend

So how, and under what conditions, should we isolate clusters?

For different teams to share AKS while remaining isolated from one another, it is recommended that they work in separate namespaces on the same cluster. This is an example of logical isolation.
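As a minimal sketch of this kind of logical isolation, two teams could each get their own namespace. The namespace names and the team label below are hypothetical and only illustrate the idea:

```yaml
# Sketch only: the namespace names and the team label are hypothetical.
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    team: team-a
---
apiVersion: v1
kind: Namespace
metadata:
  name: team-b
  labels:
    team: team-b
```

Namespaces on their own only separate names; the quotas, network policies, and role bindings discussed in this article are what actually enforce the isolation between them.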

If the same application has Dev, Test, and Prod environments, it is recommended to physically isolate at least Prod from Non-Prod, that is, to have at least two separate clusters. This minimizes the chance that code or a configuration setting in the test environment affects Prod applications.

Let us say you have several different applications and want to put them all on AKS. In this case, you can run them in the same cluster or in separate clusters, depending on factors such as the resource consumption of your applications, the size of your cluster, your Disaster Recovery needs, and data sensitivity.

In the remainder of the article, we will cover the above topics in a little more detail.

Basic Scheduler Features

- Let us assume you isolate different teams into different namespaces with the help of RBAC and Azure AD. To prevent bad code written by one team or user from consuming all the resources in the cluster, you need to limit the resources available to each namespace. ResourceQuota and LimitRange are recommended for this purpose. For more on managing resources, see the article Resource Management in Kubernetes.

- During maintenance or cluster upgrades, AKS drains your nodes one by one: it evicts the pods on the node being upgraded and reschedules them on a different node. You can use pod disruption budgets to minimize the effect on your application. A PodDisruptionBudget resource sets the minimum number of pods that must remain available during voluntary disruptions such as cluster upgrades and node drains; it cannot prevent involuntary disruptions such as hardware failures:

    apiVersion: policy/v1   # policy/v1beta1 was removed in Kubernetes 1.25
    kind: PodDisruptionBudget
    metadata:
      name: nginx-pdb
    spec:
      minAvailable: 3
      selector:
        matchLabels:
          app: nginx-frontend

- As indicated above, namespace resources should be managed with ResourceQuota and LimitRange. When a ResourceQuota covering CPU or memory is defined, pods without the corresponding request and limit definitions are rejected. But what if no ResourceQuota is defined? To check for missing request and limit definitions, the kube-advisor tool developed by the Azure team is recommended.
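To make the ResourceQuota and LimitRange recommendation concrete, here is a minimal sketch. The namespace name team-a, the resource names, and every number are assumptions chosen for illustration, not values from any real cluster:

```yaml
# Sketch only: hypothetical namespace "team-a"; all numbers are illustrative.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:
    requests.cpu: "4"       # total CPU requests allowed in the namespace
    requests.memory: 8Gi
    limits.cpu: "8"         # total CPU limits allowed in the namespace
    limits.memory: 16Gi
    pods: "20"
---
apiVersion: v1
kind: LimitRange
metadata:
  name: team-a-defaults
  namespace: team-a
spec:
  limits:
  - type: Container
    defaultRequest:         # applied when a container sets no requests
      cpu: 100m
      memory: 128Mi
    default:                # applied when a container sets no limits
      cpu: 500m
      memory: 512Mi
```

The two resources complement each other: the quota caps the namespace as a whole, while the LimitRange fills in per-container defaults so that pods without explicit requests and limits are still admitted under the quota.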

Advanced Scheduler Features

- You can reserve a node for running only certain applications. For example, your artificial intelligence applications may need to run on GPU nodes, and you do not want pods of other applications to run on these expensive nodes. To achieve this, you can add taints to the node. The scheduler will not place any pod that does not tolerate the taint on that node. An example taint command and a matching toleration are shown at the end of this section.

- Taints keep pods off a node, whereas a node selector determines which nodes a pod may run on. A node selector places no restriction on other pods, however. Continuing the same example, if you use a node selector instead of a taint for the node you reserved for artificial intelligence, the pods of your artificial intelligence application will run on the right node, but other applications can still use that node too. For a pod to select a node with a node selector, the node must carry a label. For example:

    kubectl label node aks-nodepool1 hardware=highmem

A pod specification whose nodeSelector matches the label set on the node:

    kind: Pod
    apiVersion: v1
    metadata:
      name: tf-mnist
    spec:
      containers:
      - name: tf-mnist
        image: microsoft/samples-tf-mnist-demo:gpu
      nodeSelector:
        hardware: highmem

- You can think of node affinity as a more flexible version of the node selector. With a "preferred" rule, node affinity expresses which nodes the scheduler should favor for the pod. If those nodes lack capacity, or no node of that type exists, the pod is scheduled on other nodes instead.

    kind: Pod
    apiVersion: v1
    metadata:
      name: tf-mnist
    spec:
      containers:
      - name: tf-mnist
        image: microsoft/samples-tf-mnist-demo:gpu
      affinity:
        nodeAffinity:
          # preferred rules take a weight (1-100) and a preference,
          # not nodeSelectorTerms
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1
            preference:
              matchExpressions:
              - key: hardware
                operator: In
                values:
                - highmem

Note: The IgnoredDuringExecution part of preferredDuringSchedulingIgnoredDuringExecution means the rule is evaluated only at scheduling time; if a node label changes afterwards, pods already running on the node are not evicted. If you want the affinity to be strictly enforced at scheduling time, use required instead of preferred (requiredDuringSchedulingIgnoredDuringExecution).
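For comparison, here is a sketch of the strict variant mentioned in the note. It reuses the tf-mnist pod and the hardware=highmem label from the examples in this article. The required form takes a nodeSelectorTerms list rather than weighted preferences, and the pod stays Pending if no matching node exists:

```yaml
# Sketch only: reuses the tf-mnist pod and the hardware=highmem label.
kind: Pod
apiVersion: v1
metadata:
  name: tf-mnist
spec:
  containers:
  - name: tf-mnist
    image: microsoft/samples-tf-mnist-demo:gpu
  affinity:
    nodeAffinity:
      # Hard rule: the pod is scheduled only on nodes matching a term below.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: hardware
            operator: In
            values:
            - highmem
```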

- Pod affinity and anti-affinity determine which pods should (or should not) run together with other pods on the same node.

    kind: Pod
    apiVersion: v1
    metadata:
      name: tf-mnist
    spec:
      containers:
      - name: tf-mnist
        image: microsoft/samples-tf-mnist-demo:gpu
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: security
                operator: In
                values:
                - S1
            # topologyKey is required; the hostname label means "same node"
            topologyKey: kubernetes.io/hostname
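The example above covers pod affinity only. As a sketch of the anti-affinity side, the pod below refuses to share a node with other pods carrying the same app label, which spreads replicas across nodes. The pod name and image are hypothetical; the nginx-frontend label is borrowed from the PodDisruptionBudget example:

```yaml
# Sketch only: the pod name and image are hypothetical; the app label reuses
# the nginx-frontend label from the PodDisruptionBudget example.
kind: Pod
apiVersion: v1
metadata:
  name: nginx-frontend-1
  labels:
    app: nginx-frontend
spec:
  containers:
  - name: nginx
    image: nginx
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - nginx-frontend
        # Do not schedule onto a node that already runs a matching pod.
        topologyKey: kubernetes.io/hostname
```

In practice this stanza would normally live in a Deployment's pod template rather than in a bare Pod, so that every replica carries the same rule.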

An example taint command for the reserved GPU node described at the start of this section:

    kubectl taint node aks-nodepool1 sku=gpu:NoSchedule

And the definition of a pod that tolerates this taint and can therefore be scheduled on that node:

    kind: Pod
    apiVersion: v1
    metadata:
      name: tf-mnist
    spec:
      containers:
      - name: tf-mnist
        image: microsoft/samples-tf-mnist-demo:gpu
      tolerations:
      - key: "sku"
        operator: "Equal"
        value: "gpu"
        effect: "NoSchedule"
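A toleration only permits a pod to land on the tainted node; it does not force it there. To both keep other pods off the node and pin the workload to it, the toleration can be combined with a node selector. The sketch below assumes the node carries both the hardware=highmem label and the sku=gpu:NoSchedule taint used in the examples in this section:

```yaml
# Sketch only: assumes the node has the hardware=highmem label
# and the sku=gpu:NoSchedule taint from the examples in this section.
kind: Pod
apiVersion: v1
metadata:
  name: tf-mnist
spec:
  containers:
  - name: tf-mnist
    image: microsoft/samples-tf-mnist-demo:gpu
  nodeSelector:
    hardware: highmem       # attracts this pod to the reserved node
  tolerations:
  - key: "sku"              # lets this pod past the taint that repels others
    operator: "Equal"
    value: "gpu"
    effect: "NoSchedule"
```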

Authentication and Authorization

- With Azure Active Directory, you can manage authentication for your AKS cluster. By binding ClusterRoles and Roles to Azure AD users and groups, you can manage authorization as well.

- In the Kubernetes world, the Role and ClusterRole mechanism is called role-based access control (RBAC). It is recommended to manage permissions collectively by assigning role bindings to groups you define in Azure AD. Here is an example Role definition that gives full access to the core API group resources in the finance-app namespace:

    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: finance-app-full-access-role
      namespace: finance-app
    rules:
    - apiGroups: [""]
      resources: ["*"]
      verbs: ["*"]

A sample RoleBinding that binds this role to a user group:

    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: finance-app-full-access-role-binding
      namespace: finance-app
    subjects:
    - kind: Group
      name: "[UserGroupId]"   # placeholder; replace with the ID of your Azure AD group
      namespace: finance-app
    roleRef:
      kind: Role
      name: finance-app-full-access-role
      apiGroup: rbac.authorization.k8s.io
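The Role and RoleBinding above are scoped to a single namespace. For the cluster-wide admin role mentioned earlier, one possible sketch is a ClusterRoleBinding that grants the built-in cluster-admin ClusterRole to an Azure AD group. The binding name and the group ID placeholder are hypothetical:

```yaml
# Sketch only: the binding name and the group ID placeholder are hypothetical.
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: aks-cluster-admins
subjects:
- kind: Group
  apiGroup: rbac.authorization.k8s.io
  name: "<AdminGroupId>"    # replace with the ID of your Azure AD admin group
roleRef:
  kind: ClusterRole
  name: cluster-admin       # built-in ClusterRole with full cluster access
  apiGroup: rbac.authorization.k8s.io
```

Because cluster-admin grants unrestricted access, it is usually worth keeping the membership of such a group as small as possible.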

- Pod identity is a managed identity solution developed by Azure. It enables pods on AKS to securely access services managed on Azure (Azure Cosmos DB, Azure SQL, etc.). Currently, it supports only Linux applications. It is the recommended way to let your applications on AKS access Azure services.

Let us leave it here for now. In the next article, I will talk about security, image management, networking, and storage.