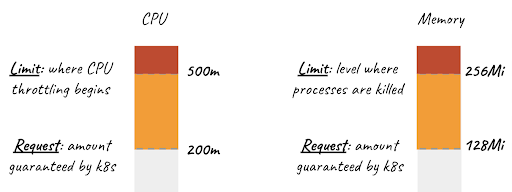

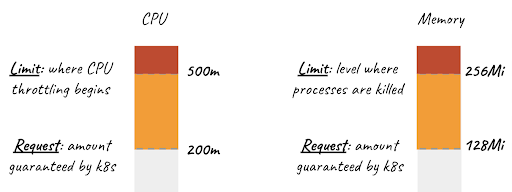

Request

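Request determines the minimum amount of a resource (such as CPU or memory) reserved for a container on the Kubernetes cluster, and the scheduler uses it to decide which node the pod can be placed on. For example, consider a 3-node cluster. Let us assume that the memory still available for new requests on each node (its allocatable memory minus the requests of the pods already running there) is as follows: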

Node1: 1Gi

Node2: 2Gi

Node3: 4Gi

If we set the pod's memory request to 3Gi, the pod can only be scheduled on Node3. If we set the request to 5Gi, no node can accommodate it, so the pod cannot be scheduled anywhere and remains in the Pending state.
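The scheduling decision above can be sketched in Python. This is a simplified model, not kube-scheduler's code: a node qualifies only if its unreserved memory covers the pod's request. It assumes the third node has 4Gi unreserved, a value the example's conclusion requires (3Gi fits only there, 5Gi fits nowhere); the helper name `feasible_nodes` is hypothetical.

```python
GI = 1024 ** 3  # one gibibyte ("Gi") in bytes


def feasible_nodes(unreserved: dict[str, int], request: int) -> list[str]:
    """Return the nodes whose unreserved memory (allocatable minus existing
    requests) is at least `request` bytes. Hypothetical helper, not a real API."""
    return [node for node, free in unreserved.items() if free >= request]


# Unreserved memory per node; Node3's 4Gi is an assumption.
unreserved = {"Node1": 1 * GI, "Node2": 2 * GI, "Node3": 4 * GI}

print(feasible_nodes(unreserved, 3 * GI))  # ['Node3']
print(feasible_nodes(unreserved, 5 * GI))  # [] -> the pod stays Pending
```

Note that Kubernetes quantity suffixes differ: `Gi` means 2^30 bytes while `G` means 10^9 bytes, so a request written as `5G` is slightly smaller than `5Gi`.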

Limit

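Limit determines the maximum amount of a resource that a container may use. For example, let us say you set the memory limit of a container to 4Gi and, under load, the application tries to use more than 4Gi. The container is then killed with the OOMKilled reason and restarted by the kubelet according to the pod's restart policy. CPU behaves differently: a container that reaches its CPU limit is throttled rather than killed.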
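The difference between memory and CPU limits can be summarized in a toy Python model. It is only an illustration: real enforcement is done by the Linux kernel through cgroups, and the function name `outcome` and its `demand` parameter (the amount the container tries to use) are hypothetical.

```python
def outcome(resource: str, demand: float, limit: float) -> str:
    """Toy model: what happens to a container whose demand is compared to its limit.
    Real enforcement happens in the kernel via cgroups, not in code like this."""
    if demand <= limit:
        return "running"
    if resource == "memory":
        # Memory is incompressible, so exceeding the limit gets the container
        # OOM-killed; the kubelet then restarts it per the pod's restartPolicy.
        return "OOMKilled"
    if resource == "cpu":
        # CPU is compressible: the container simply receives less CPU time.
        return "throttled"
    raise ValueError(f"unsupported resource: {resource}")


print(outcome("memory", demand=3.5, limit=4))  # running
print(outcome("memory", demand=4.2, limit=4))  # OOMKilled
print(outcome("cpu", demand=1.5, limit=1))     # throttled
```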

Kubernetes does not define a default request or limit for your applications (unless a LimitRange, covered below, provides one); you are expected to define them yourself. As a best practice, an application with no memory limit should not run on the cluster. Otherwise, in case of a memory leak or overload, it can consume all the memory of the node it runs on, and the other applications on that node may become unresponsive.
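Following this best practice can be checked mechanically. The sketch below is a hypothetical audit helper, not part of any Kubernetes tool: it walks pod specs represented as Python dicts shaped like the Pod YAML (`spec.containers[].resources.limits`) and reports containers that lack a memory limit. Loading real manifests or API objects into this shape is left out.

```python
def containers_without_memory_limit(pods: list[dict]) -> list[str]:
    """Return 'pod/container' names for containers with no memory limit.
    Hypothetical helper; pods are plain dicts mirroring the Pod YAML layout."""
    missing = []
    for pod in pods:
        pod_name = pod["metadata"]["name"]
        for container in pod["spec"]["containers"]:
            limits = container.get("resources", {}).get("limits", {})
            if "memory" not in limits:
                missing.append(f"{pod_name}/{container['name']}")
    return missing


pods = [
    {"metadata": {"name": "web"},
     "spec": {"containers": [
         {"name": "app", "resources": {"limits": {"memory": "512Mi"}}},
         {"name": "sidecar"},  # no resources block at all
     ]}},
]
print(containers_without_memory_limit(pods))  # ['web/sidecar']
```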

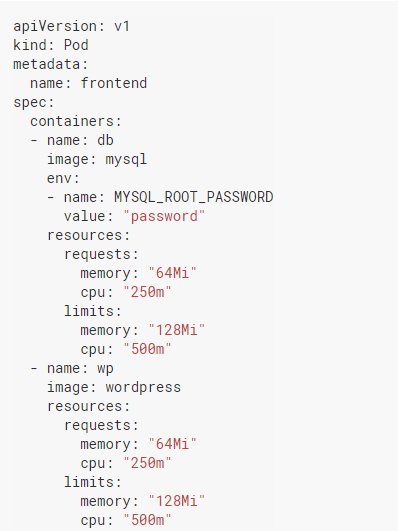

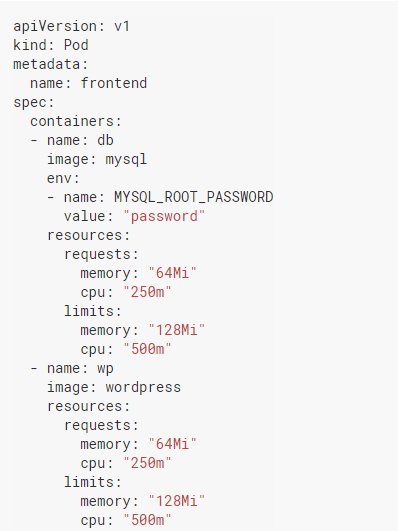

So how do we define requests and limits? Let us examine the example shown above.

When you look at the YAML above, one of the first things you will notice is that requests and limits are defined per container, not per pod. Containers in the same pod can have different requests and limits, and you can think of the pod's requests and limits as the sum of those of its containers. A limit must be equal to or greater than the corresponding request. If you try to set a limit lower than the request, Kubernetes will reject the pod.
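The two rules in this paragraph, pod totals as the sum over containers and limit ≥ request, can be sketched as follows. This is a simplified model: it ignores init containers and pod overhead, which the real scheduler also accounts for, and it expresses CPU in millicores and memory in MiB as plain integers (an assumption to keep the arithmetic exact). The container names are hypothetical.

```python
def validate_container(c: dict) -> None:
    """Reject a container whose limit is lower than its request for any resource."""
    for resource, request in c["requests"].items():
        limit = c["limits"].get(resource)
        if limit is not None and limit < request:
            raise ValueError(f"{c['name']}: {resource} limit {limit} < request {request}")


def pod_totals(containers: list[dict]) -> tuple[dict, dict]:
    """Sum requests and limits over the pod's containers (simplified view)."""
    requests: dict = {}
    limits: dict = {}
    for c in containers:
        validate_container(c)
        for r, v in c["requests"].items():
            requests[r] = requests.get(r, 0) + v
        for r, v in c["limits"].items():
            limits[r] = limits.get(r, 0) + v
    return requests, limits


# CPU in millicores, memory in MiB.
containers = [
    {"name": "app", "requests": {"cpu": 250, "memory": 64},
     "limits": {"cpu": 500, "memory": 128}},
    {"name": "log-agent", "requests": {"cpu": 100, "memory": 32},
     "limits": {"cpu": 200, "memory": 64}},
]
print(pod_totals(containers))
# ({'cpu': 350, 'memory': 96}, {'cpu': 700, 'memory': 192})
```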

Defining requests and limits on Kubernetes is that easy. But in a large cluster where many developers work, how can we guarantee that requests and limits are always defined, and how do we keep their values within bounds? The answers lie in Kubernetes' ResourceQuota and LimitRange objects.

ResourceQuota

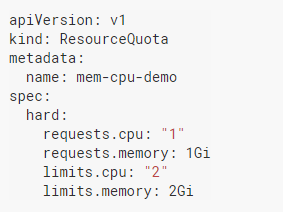

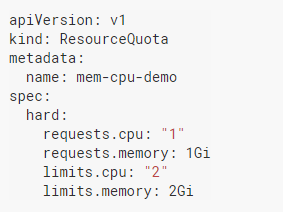

ResourceQuota is a resource type used to apply quotas per namespace. With it, you can cap the sum of the CPU and memory requests and limits defined in a namespace. It can also cap the number of pods, services, ConfigMaps, PVCs, etc. that can be created in a namespace, but since our topic is compute resources, we will not cover those restrictions in this article.

Let us examine the YAML above, which defines a quota for a namespace.

This simple example imposes several restrictions on the namespace. Once the quota is in place, a pod whose containers do not define CPU and memory requests and limits cannot be created in that namespace (unless a LimitRange fills in defaults). The sum of the CPU requests of all pods cannot exceed 1 CPU, and the sum of their CPU limits cannot exceed 2. Likewise, total memory requests cannot exceed 1Gi and total memory limits cannot exceed 2Gi.
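The admission logic of this quota can be modeled in a few lines. This is a simplified sketch, not the API server's implementation: `HARD` mirrors the example quota (requests.cpu 1, limits.cpu 2, requests.memory 1Gi, limits.memory 2Gi) with CPU in millicores and memory in MiB, and `used` stands for the totals already defined by existing pods. The function name `admit` and all sample figures are hypothetical.

```python
# CPU in millicores, memory in MiB; mirrors the example quota's hard values.
HARD = {"requests.cpu": 1000, "limits.cpu": 2000,
        "requests.memory": 1024, "limits.memory": 2048}


def admit(used: dict, pod: dict, hard: dict = HARD) -> tuple[bool, str]:
    """Return (allowed, reason) for a new pod, given the pod's own totals
    (keyed like the quota) and the totals already defined in the namespace."""
    for key, cap in hard.items():
        if key not in pod:
            # With a compute quota in place, the pod must declare this value.
            return False, f"must specify {key}"
        if used.get(key, 0) + pod[key] > cap:
            return False, f"exceeded quota: {key}"
    return True, "admitted"


used = {"requests.cpu": 800, "limits.cpu": 1600,
        "requests.memory": 512, "limits.memory": 1024}
small = {"requests.cpu": 100, "limits.cpu": 200,
         "requests.memory": 128, "limits.memory": 256}
big = {"requests.cpu": 300, "limits.cpu": 300,
       "requests.memory": 128, "limits.memory": 256}

print(admit(used, small))                # (True, 'admitted')
print(admit(used, big))                  # (False, 'exceeded quota: requests.cpu')
print(admit(used, {"limits.cpu": 100}))  # (False, 'must specify requests.cpu')
```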

Imagine that you have an application named XYZ and you accidentally typed a far too large memory request into its deployment YAML. Its containers then reserve most of the memory in the cluster, even though the application does not actually use that much. Pods of other applications can no longer be scheduled and stay Pending. To prevent this, you can use ResourceQuota to cap the total requests and limits that can be defined in each namespace.

Again, I would like to emphasize that ResourceQuota does not look at the memory or CPU actually used; it deals only with the totals of the defined requests and limits. For example, actual memory usage in a namespace may be very low, and there may be plenty of free memory for your new pod. However, if adding your new pod would push the sum of memory requests or limits in the namespace above the values defined in the ResourceQuota, the pod will be rejected.
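The point that only defined values count can be made concrete with a small sketch. The pods, their actual usage figures and the 1Gi `requests.memory` quota below are made up for illustration, and the model looks at memory requests only.

```python
# Memory in MiB. Pod names and figures are hypothetical.
pods = [
    {"name": "api", "request_mem": 600, "actual_mem": 50},
    {"name": "worker", "request_mem": 300, "actual_mem": 20},
]
QUOTA_REQUESTS_MEMORY = 1024  # requests.memory: 1Gi


def quota_used(pods: list[dict]) -> int:
    """Sum of defined memory requests; actual usage is deliberately ignored."""
    return sum(p["request_mem"] for p in pods)


def fits(pods: list[dict], new_request: int) -> bool:
    return quota_used(pods) + new_request <= QUOTA_REQUESTS_MEMORY


print(sum(p["actual_mem"] for p in pods))  # 70 (real usage is tiny)
print(quota_used(pods))                    # 900
print(fits(pods, 200))                     # False: 900 + 200 > 1024, rejected
```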

LimitRange

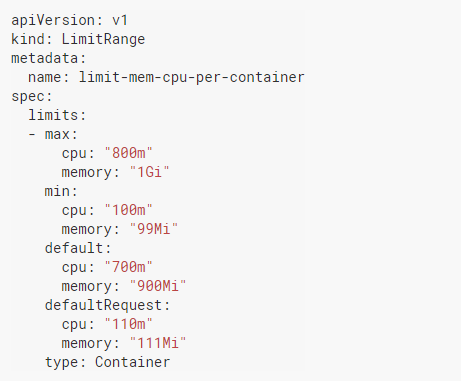

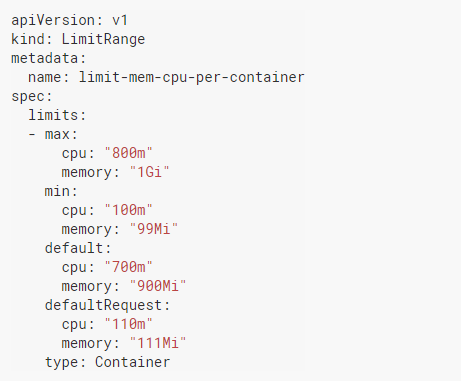

Like ResourceQuota, LimitRange deals with the request and limit values defined in a namespace. But while ResourceQuota looks at the defined totals, LimitRange sets the minimum and maximum values that can be defined per container (or per pod).

It can also set default requests and limits for containers that do not define them. Let us go through the example YAML above.

The definition above tells us a few things. Since its type is Container, this LimitRange applies its checks to each container individually. The max values cap the limits (and requests) a container may define, while the min values set the lowest request it may define. So let us say you create a pod whose container has a CPU request below 100m. Since the defined min CPU value is 100m, the pod will be rejected.
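The LimitRange behavior described here, defaults first and then min/max checks per container, can be sketched as below. Only the 100m CPU minimum comes from the text; the other values in `LIMIT_RANGE` are assumptions, units are millicores and MiB, the function name `apply_limit_range` is hypothetical, and the real admission plugin applies further rules that this model skips.

```python
# CPU in millicores, memory in MiB. Only min cpu=100 comes from the text.
LIMIT_RANGE = {
    "min": {"cpu": 100},
    "max": {"cpu": 1000, "memory": 1024},
    "defaultRequest": {"cpu": 200, "memory": 256},
    "default": {"cpu": 500, "memory": 512},  # default limits
}


def apply_limit_range(container: dict, lr: dict = LIMIT_RANGE) -> dict:
    """Fill in defaults, then enforce min/max for one container (simplified)."""
    requests = dict(container.get("requests", {}))
    limits = dict(container.get("limits", {}))
    # 1. Defaulting only touches values the container left out.
    for resource, value in lr["defaultRequest"].items():
        requests.setdefault(resource, value)
    for resource, value in lr["default"].items():
        limits.setdefault(resource, value)
    # 2. The request must not be below min.
    for resource, floor in lr["min"].items():
        if requests.get(resource, 0) < floor:
            raise ValueError(f"{resource} request below min {floor}")
    # 3. A limit must exist, and neither limit nor request may exceed max.
    for resource, ceiling in lr["max"].items():
        if (resource not in limits or limits[resource] > ceiling
                or requests.get(resource, 0) > ceiling):
            raise ValueError(f"{resource} exceeds max {ceiling}")
    return {"requests": requests, "limits": limits}


print(apply_limit_range({}))
# {'requests': {'cpu': 200, 'memory': 256}, 'limits': {'cpu': 500, 'memory': 512}}
for bad in ({"requests": {"cpu": 50}},       # CPU request under the 100m min
            {"limits": {"memory": 4096}}):   # mistyped 4Gi memory limit
    try:
        apply_limit_range(bad)
    except ValueError as err:
        print("rejected:", err)
# rejected: cpu request below min 100
# rejected: memory exceeds max 1024
```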

Let us go back to the XYZ application from the ResourceQuota example, whose memory settings were mistyped with a far too large value. Since the LimitRange sets a maximum for each container, the pod will be rejected, and the other applications in the cluster are protected.

Like ResourceQuota, LimitRange deals with the defined limits and requests, not with actual usage.

TL;DR

In your Kubernetes cluster, you do not want your applications to consume resources without any control and cause problems across the cluster. To prevent this, define requests (the resources reserved for a container) and limits (the maximum it may use) for your containers. If your cluster is large and you want to enforce policies per namespace, both on totals and per container, you can centrally control the resources used by your applications with ResourceQuota and LimitRange.